Percona XtraDB Multi-Master Replication cluster setup between 3 nodes

This guide describes the steps to establish a Percona XtraDB Cluster v8.0 among three Ubuntu 22.04 nodes.

Install Percona XtraDB Cluster on every host that will act as a cluster node, and make sure you have root access to the MySQL server on each one. This setup uses multi-master replication.

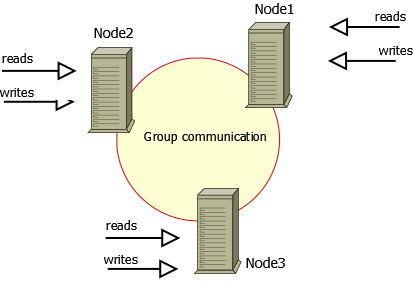

In multi-master replication, every node acts as a master. Any node accepts inserts and updates, and each change is replicated to the other nodes, so every node holds a full copy of the data.

| Node | IP |
|---|---|
| node1 | 172.16.0.12 |
| node2 | 172.16.0.13 |
| node3 | 172.16.0.14 |

Run the following installation commands on all three nodes.

apt update

apt install -y wget gnupg2 lsb-release curl

wget https://repo.percona.com/apt/percona-release_latest.generic_all.deb

dpkg -i percona-release_latest.generic_all.deb

apt update

percona-release setup pxc80

apt install percona-xtradb-cluster

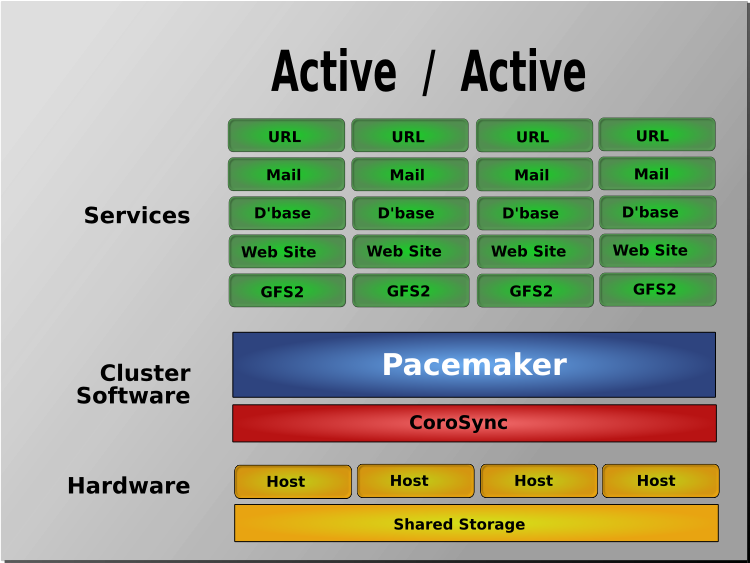

Encrypt PXC Traffic

There are two kinds of traffic in Percona XtraDB Cluster: client-server traffic (the one between client applications and cluster nodes), and replication traffic, which includes SST, IST, write-set replication, and various service messages.

Percona XtraDB Cluster supports encryption for all types of traffic. Replication traffic encryption can be configured either automatically or manually. This guide uses the automatic configuration.

SST (State Snapshot Transfer) is the full copy of data from one node to another. It’s used when a new node joins the cluster and needs to transfer data from an existing node.

IST (Incremental State Transfer) lets a joining node catch up with the group by receiving only the write-sets it missed instead of a full state snapshot. It is possible only while those write-sets are still in the donor’s write-set cache.

Encrypt Replication Traffic

Replication traffic refers to the inter-node traffic, which includes SST, IST, and regular replication traffic.

Percona XtraDB Cluster supports a single configuration option to secure the entire replication traffic, often referred to as SSL automatic configuration. Alternatively, you can configure the security of each channel by specifying independent parameters.

The automatic SSL encryption configuration requires key and certificate files. MySQL generates default key and certificate files and places them in the data directory.

Percona XtraDB Cluster includes the pxc-encrypt-cluster-traffic variable, enabling automatic SSL encryption for SST, IST, and replication traffic.

By default, pxc-encrypt-cluster-traffic is enabled, ensuring a secured channel for replication. This variable is not dynamic and cannot be changed at runtime.

If you wish to disable encryption for replication traffic, you must stop the cluster and update the [mysqld] section of the configuration file on each node with pxc-encrypt-cluster-traffic=OFF. Then, restart the cluster.

In this guide, encryption between the nodes stays enabled, so follow the steps below. Add or update these variables in the /etc/mysql/mysql.conf.d/mysqld.cnf file on node1.

wsrep_node_name=node1

wsrep_node_address=172.16.0.12

pxc_strict_mode=ENFORCING

wsrep_provider=/usr/lib/galera4/libgalera_smm.so

wsrep_cluster_name=pxc-cluster

wsrep_cluster_address=gcomm://172.16.0.12,172.16.0.13,172.16.0.14

Similarly, add or update the same variables on the other two nodes. Only wsrep_node_name and wsrep_node_address differ per node. The other parameters stay the same.
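As a rough illustration of which values change per node, the sketch below prints the settings for a given node name and IP address. The function name render_wsrep_settings is a hypothetical helper invented for this example, not part of Percona XtraDB Cluster. It only prints text, so you still place the result in the [mysqld] section of mysqld.cnf yourself.

```shell
#!/bin/sh
# Hypothetical helper (not a Percona tool): print the wsrep settings for one node.
# Only the node name ($1) and node address ($2) vary between nodes.
render_wsrep_settings() {
    node_name="$1"
    node_address="$2"
    cat <<EOF
wsrep_node_name=${node_name}
wsrep_node_address=${node_address}
pxc_strict_mode=ENFORCING
wsrep_provider=/usr/lib/galera4/libgalera_smm.so
wsrep_cluster_name=pxc-cluster
wsrep_cluster_address=gcomm://172.16.0.12,172.16.0.13,172.16.0.14
EOF
}

# Print the settings for the second and third nodes from the table above.
render_wsrep_settings node2 172.16.0.13
render_wsrep_settings node3 172.16.0.14
```

Printing the full block for each node makes it easy to compare nodes and confirm that only the first two lines differ.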

After you configure all PXC nodes, initialize the cluster by bootstrapping the first node. The initial node must contain all the data that you want to be replicated to the other nodes.

systemctl start mysql@bootstrap.service

When you start the node using the previous command, it runs in bootstrap mode with wsrep_cluster_address=gcomm://. This tells the node to initialize the cluster with wsrep_cluster_conf_id variable set to 1. After you add other nodes to the cluster, you can then restart this node as normal, and it will use standard configuration again.

To make sure that the cluster has been initialized, run the following statement in the MySQL client on node1:

show status like 'wsrep%';

The output should show that the cluster size is 1, the node belongs to the primary component, and the node is in the Synced state. It is fully connected and ready for write-set replication.
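The same check can be sketched as a small script. The helper check_wsrep_status below is hypothetical. It assumes the status is supplied on standard input as tab-separated name and value pairs, which is what `mysql -N -B -e "SHOW STATUS LIKE 'wsrep%'"` produces, and it takes the expected cluster size as its argument. The sample input here is made up for illustration.

```shell
#!/bin/sh
# Hypothetical helper: read "name<TAB>value" lines on stdin and succeed only if
# the five status variables described above have the expected values.
# $1 is the expected cluster size (1 right after bootstrapping).
check_wsrep_status() {
    expected_size="$1"
    awk -F '\t' -v size="$expected_size" '
        $1 == "wsrep_cluster_size"        { seen++; if ($2 != size)      fail = 1 }
        $1 == "wsrep_cluster_status"      { seen++; if ($2 != "Primary") fail = 1 }
        $1 == "wsrep_local_state_comment" { seen++; if ($2 != "Synced")  fail = 1 }
        $1 == "wsrep_connected"           { seen++; if ($2 != "ON")      fail = 1 }
        $1 == "wsrep_ready"               { seen++; if ($2 != "ON")      fail = 1 }
        END { if (fail || seen != 5) exit 1 }
    '
}

# Illustrative input only. On a real node, pipe in the client output instead:
#   mysql -u root -p -N -B -e "SHOW STATUS LIKE 'wsrep%'" | check_wsrep_status 1
printf 'wsrep_cluster_size\t1\nwsrep_cluster_status\tPrimary\nwsrep_local_state_comment\tSynced\nwsrep_connected\tON\nwsrep_ready\tON\n' \
    | check_wsrep_status 1 && echo "node looks healthy"
```

Once all three nodes have joined, the same function can be called with 3 as the expected size.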

Now copy the certificates and keys from node1 to the two remaining nodes. The files are in /var/lib/mysql.

It is important that your cluster use the same SSL certificates and keys on all nodes.
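One possible way to do the copy is with scp, sketched below as a dry run. The helper print_cert_copy_commands is hypothetical and only prints the commands so you can review them before running anything. It assumes root SSH access from node1 to the other nodes, which your hosts may not allow, and that the copied files should be owned by the mysql user.

```shell
#!/bin/sh
# Hypothetical dry-run helper: print (do not run) the commands that would copy
# the .pem files from this node to each target and fix their ownership.
# Assumptions: root SSH login works, and the files belong to mysql:mysql.
print_cert_copy_commands() {
    for target in "$@"; do
        echo "scp /var/lib/mysql/*.pem root@${target}:/var/lib/mysql/"
        echo "ssh root@${target} 'chown mysql:mysql /var/lib/mysql/*.pem'"
    done
}

# Show the commands for node2 and node3 from the table above.
print_cert_copy_commands 172.16.0.13 172.16.0.14
```

If root login over SSH is disabled, copy the files through an unprivileged account and move them into place with sudo instead.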

bdn@node02:~$ ls /var/lib/mysql/*pem

/var/lib/mysql/ca-key.pem /var/lib/mysql/client-key.pem /var/lib/mysql/server-cert.pem

/var/lib/mysql/ca.pem /var/lib/mysql/private_key.pem /var/lib/mysql/server-key.pem

/var/lib/mysql/client-cert.pem /var/lib/mysql/public_key.pem

To verify that the server and client certificates are correctly signed by the same CA certificate, run the following command:

bdn@node02:/var/lib/mysql$ openssl verify -CAfile ca.pem server-cert.pem client-cert.pem

server-cert.pem: OK

client-cert.pem: OK

By default, MySQL generates certificates that are valid for 10 years. You can check the expiry date as follows:

bdn@node02:/var/lib/mysql$ openssl x509 -enddate -noout -in server-cert.pem

notAfter=Apr 17 13:36:50 2034 GMT

Now start MySQL on the second node.

systemctl start mysql

Similarly, start the mysql service on the third node.

Now you can stop the mysql@bootstrap.service service on node1 and start the regular mysql service:

systemctl stop mysql@bootstrap.service

systemctl start mysql

Now all 3 nodes are connected to the cluster.

You will see log entries similar to the following in /var/log/mysql/error.log.

2024-04-21T17:21:17.442573Z 1 [Note] [MY-000000] [Galera] ================================================

View:

id: 0c233cf9-fe50-11ee-a3e9-dfbfab2fa1fe:31

status: primary

protocol_version: 4

capabilities: MULTI-MASTER, CERTIFICATION, PARALLEL_APPLYING, REPLAY, ISOLATION, PAUSE, CAUSAL_READ, INCREMENTAL_WS, UNORDERED, PREORDERED, STREAMING, NBO

final: no

own_index: 0

members(3):

0: 60c1bc1f-0003-11ef-bae7-7347836516bcb, node01

1: 7b9841ae-0003-11ef-8586-4b8504592b099, node02

2: 8f92b85a-0003-11ef-b411-5a2ad6404a18f, node03

Now, any changes made to any MySQL server will automatically be reflected on the other nodes.
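A quick way to confirm this is to write on one node and read on another. The sketch below only prints the SQL for such a smoke test. The function names, the pxc_smoke_test database and the example table are hypothetical, chosen for illustration. The table uses InnoDB and has a primary key because pxc_strict_mode=ENFORCING is set in this guide.

```shell
#!/bin/sh
# Hypothetical helpers: print SQL for a replication smoke test.
# The database and table names are made up for this example.
smoke_write_sql() {
    cat <<'EOF'
CREATE DATABASE IF NOT EXISTS pxc_smoke_test;
CREATE TABLE IF NOT EXISTS pxc_smoke_test.example (
    node_id INT PRIMARY KEY,
    node_name VARCHAR(30)
) ENGINE=InnoDB;
INSERT INTO pxc_smoke_test.example VALUES (1, 'node1');
EOF
}

smoke_read_sql() {
    cat <<'EOF'
SELECT * FROM pxc_smoke_test.example;
EOF
}

# Print both statements for review. To run them, pipe each into the client:
#   on node1:  smoke_write_sql | mysql -u root -p
#   on node2:  smoke_read_sql  | mysql -u root -p
smoke_write_sql
smoke_read_sql
```

If the row inserted on node1 shows up in the SELECT on node2 or node3, replication is working. Drop the test database afterwards.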
